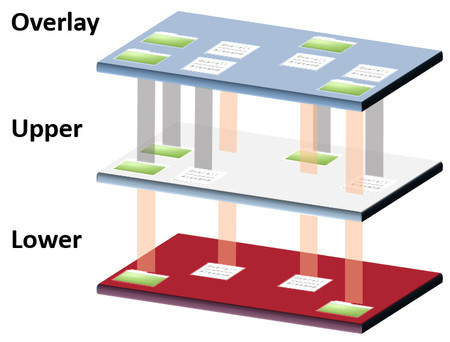

Returning to the world of blogging after nearly a month’s hiatus, I find myself intrigued by the concept of Overlay Filesystems. This article has piqued my curiosity, and I am convinced it will offer valuable insights for my future endeavors. [Read More…]

Pervasive Monitoring and Security in Africa

If you suspect that the number of attacks worldwide is on the rise, the statistics and figures will prove you right. For example, if you think about preventing attacks such as Man-in-the-Middle, guidance in implementing the right TLS protocol, [Read More…]