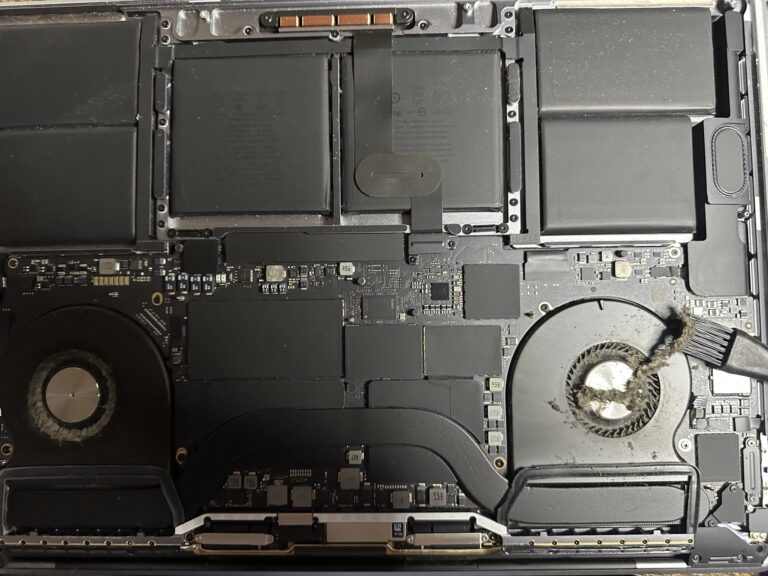

If you’ve been following my journey, you might know that I recently bought an M2 Max MacBook Pro. However, I also own a 2019 Intel MacBook Pro, which has started to slow down significantly in recent days. Thankfully, I was able [Read More…]

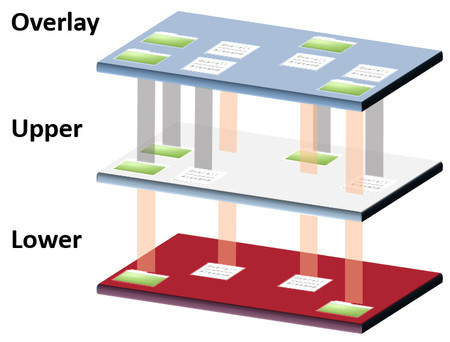

Getting started with Solace message broker

I had never heard of the Solace message broker; the only broker I was familiar with was RabbitMQ, which is used in VMware architecture. I may have come across some other message brokers years ago, but I never worked with them much. For the past few days, I have been playing around with [Read More…]