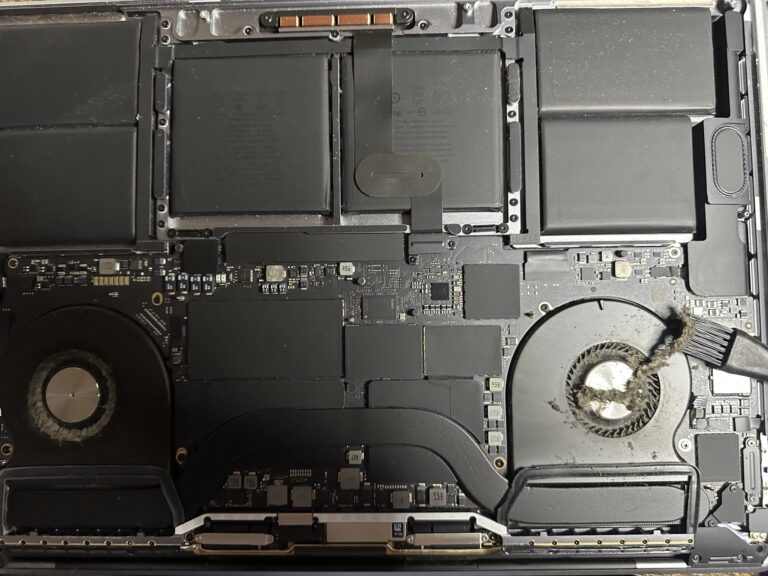

If you’ve been following my journey, you might know that I recently bought an M2 Max MacBook Pro. However, I also own a 2019 Intel MacBook Pro, which has started to slow down significantly in recent days. Thankfully, I was able [Read More…]

Deploy AWS EC2 instances using Ansible

We have previously seen how to use Terraform to deploy an AWS EC2 instance, but this is also possible with Ansible. In this blog post, we will focus on deploying an AWS EC2 instance using Ansible. I assume [Read More…]