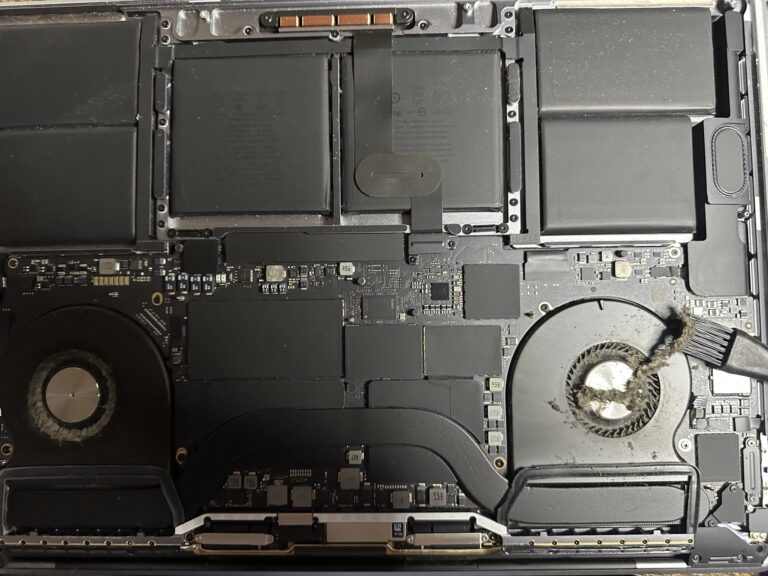

If you’ve been following my journey, you might know that I recently bought an M2 Max MacBook Pro. However, I also own a 2019 Intel MacBook Pro, which has started to slow down significantly in recent days. Thankfully, I was able [Read More…]

Install Zabbix with MariaDB, PHP7, and HTTPD on CentOS 7

When it comes to monitoring, Zabbix is one of the best-known web applications. In this article, we will walk through the basic installation and configuration of a Zabbix server on CentOS 7. Zabbix is an open-source monitoring software tool [Read More…]