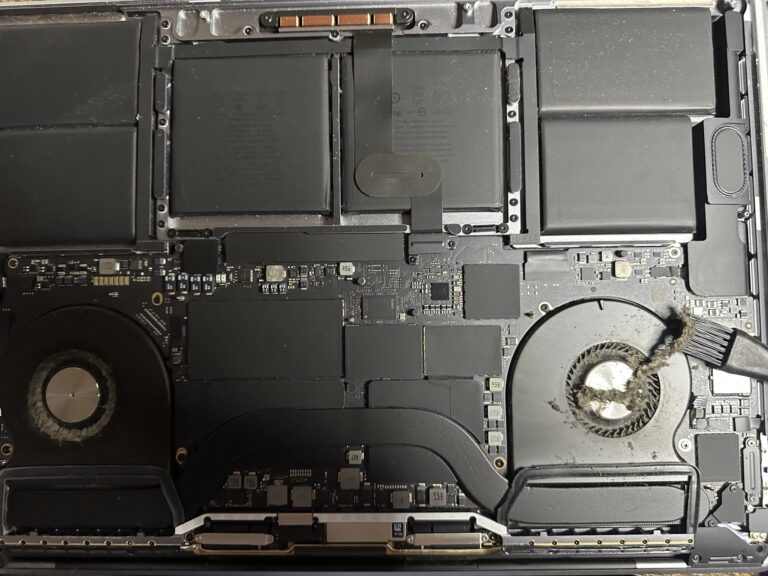

If you’ve been following my journey, you might know that I recently bought an M2 Max MacBook Pro. However, I also own a 2019 Intel MacBook Pro, which has started to slow down significantly in recent days. Thankfully, I was able [Read More…]

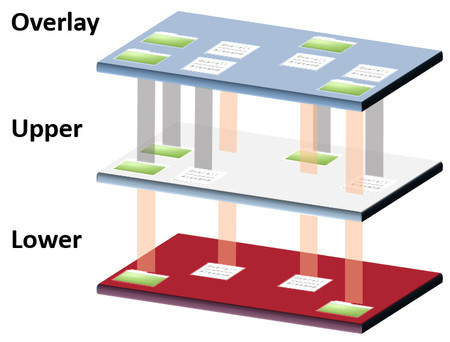

Puppet already installed? What Next? – Part 1

A few days back, we covered the installation of the Puppet server and Puppet agent in a RHEL 7 environment. In this article, we will focus on the technical part: how to administer and write manifests on the Puppet server to [Read More…]