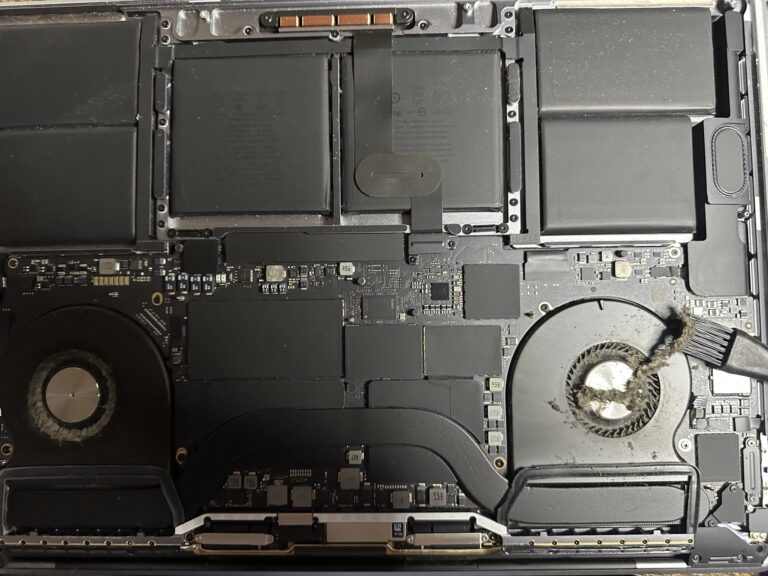

If you’ve been following my journey, you might know that I recently bought an M2 Max MacBook Pro. However, I also own a 2019 Intel MacBook Pro, which has slowed down significantly over the past few days. Thankfully, I was able [Read More…]

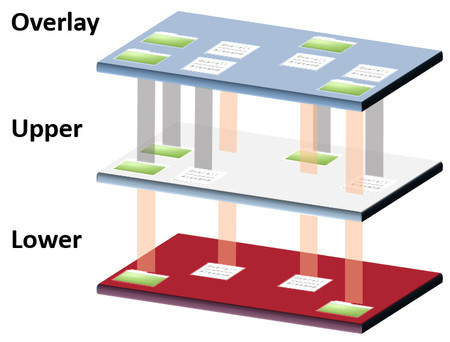

Web Security Fundamentals by Varonis

A few days ago, I received an invitation to attend an online course by Varonis on Web Security Fundamentals, taught by Troy Hunt. Although the course is aimed at beginners, it's worth watching and quite interesting. [Read More…]