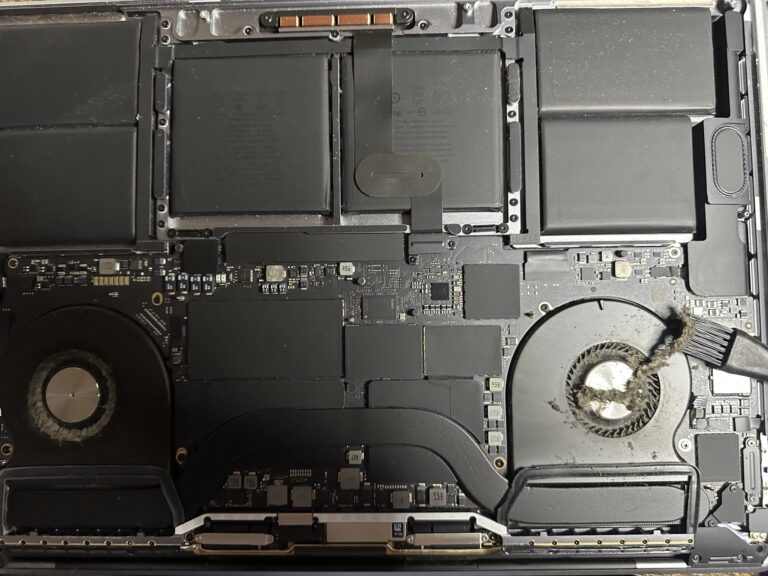

If you’ve been following my journey, you might know that I recently bought an M2 Max MacBook Pro. However, I also own a 2019 Intel MacBook Pro, which has started to slow down significantly in recent days. Thankfully, I was able [Read More…]

A trip to a Wind Farm at Plaine Des Roches

This Sunday, the 16th of April, I came across an interesting location in the north-east of Mauritius, at Plaine Des Roches, where electricity is produced by wind farms. The company is Quadran (renamed Qair in 2023), which has invested in this environmental [Read More…]