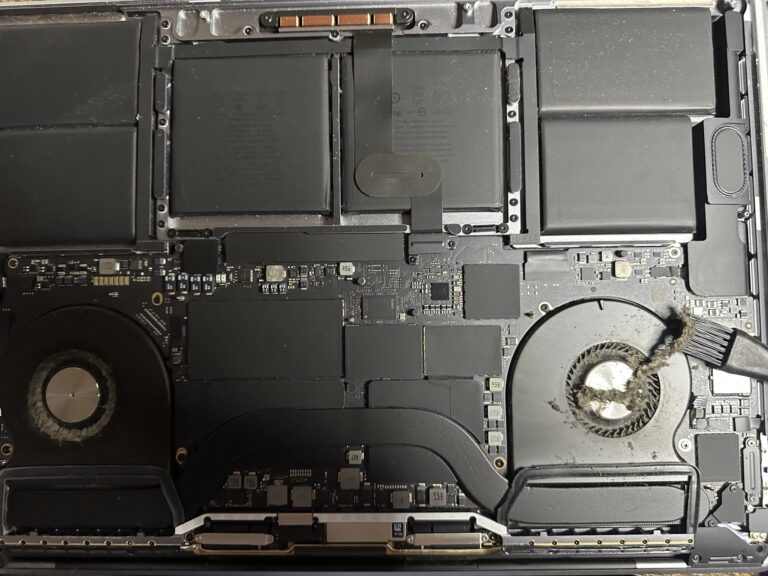

If you’ve been following my journey, you might know that I recently bought an M2 Max MacBook Pro. However, I also own a 2019 Intel MacBook Pro, which has started to slow down significantly in recent days. Thankfully, I was able [Read More…]

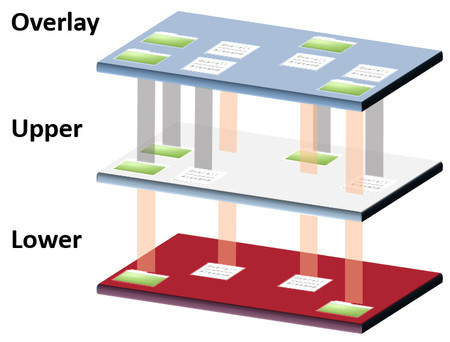

SAR command daily tips and tricks

As I promised on Twitter a few days back, I will post some interesting tips and tricks using the SAR (System Activity Report) Linux command. The sar command writes to standard output the contents of selected cumulative activity counters in the operating system. The [Read More…]