The third part of Data Center Virtualization – Components of VMware vSphere covers the following:

- Shared storage

- Storage protocols

- Datastores

- Virtual SAN

- Virtual Volumes

Articles already published are:

- An introduction to Data Center Virtualization

- Components of VMware vSphere 6.0 – part 1, which includes an overview of vSphere 6.0, its architecture, topology and configuration maximums.

- Components of VMware vSphere 6.0 – part 2, which includes an introduction to vCenter Server and its features.

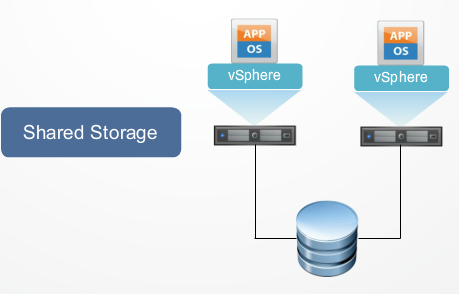

Shared Storage

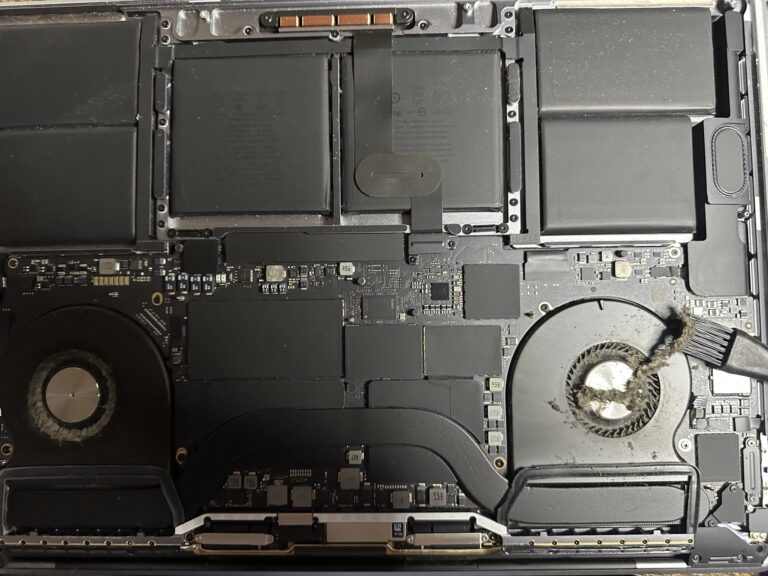

Shared storage consists of storage systems that your ESXi hosts use to store virtual machine files remotely. The hosts access these systems over a high-speed storage network. Because the VM files live outside any single host, VMs can be run on different hosts, and the remaining ESXi hosts can still access the storage even if a few hosts become unavailable.

Several vSphere features also require a shared storage infrastructure to work properly, including:

- DRS

- DPM

- Storage DRS

- High Availability

- Fault tolerance
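
To make the idea of shared storage concrete, here is a minimal sketch that finds the datastores every host can reach. The host names, datastore names and both helper functions are hypothetical and only model the concept; they are not part of any VMware API.

```python
from typing import Dict, Set


def shared_datastores(visible: Dict[str, Set[str]]) -> Set[str]:
    """Return the datastores that every host in `visible` can access."""
    if not visible:
        return set()
    return set.intersection(*visible.values())


def can_run_on(vm_datastore: str, host: str, visible: Dict[str, Set[str]]) -> bool:
    """A host can run a VM only if it can reach the datastore holding the VM's files."""
    return vm_datastore in visible.get(host, set())


# Hypothetical inventory: host name -> datastores that host can see.
inventory = {
    "esxi-01": {"san-ds1", "local-01"},
    "esxi-02": {"san-ds1", "local-02"},
    "esxi-03": {"san-ds1"},
}

# Only datastores visible to all hosts are candidates for cross-host features.
print(shared_datastores(inventory))

# A VM on esxi-01's local disk cannot be started on esxi-02.
print(can_run_on("local-01", "esxi-02", inventory))
```

In this model, a VM stored on `san-ds1` can be placed on any of the three hosts, which is the property that features such as High Availability and DRS depend on.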

Storage Protocols

vSphere supports the following storage protocols:

- Fibre Channel – A high-speed network technology that is primarily used to connect storage components over a SAN. Fibre Channel solutions require dedicated network storage devices that are not typically accessed through other LAN devices. Fibre Channel is commonly used for vSphere VMFS datastores and boot LUNs for ESXi. vSphere provides native support for Fibre Channel protocols and for Fibre Channel over Ethernet (FCoE). It supports Fibre Channel speeds from 2 Gbps to 16 Gbps.

- Fibre Channel over Ethernet – In FCoE, Fibre Channel traffic is encapsulated into Ethernet frames, and the FCoE frames are converged with networking traffic. By enabling the same Ethernet link to carry both Fibre Channel and Ethernet traffic, FCoE increases the use of the Ethernet infrastructure. FCoE also reduces the total number of network ports used in the network environment.

- iSCSI – Uses Ethernet connections between computer systems or host servers and high-performance storage systems. iSCSI is commonly used for VMFS datastores. It does not require special-purpose cables and can also be run over long distances. You can thin-provision iSCSI LUNs; on NFS, by contrast, the disk provisioning format is largely determined by the NAS server. ESXi provides native support for iSCSI through the following iSCSI initiators: independent hardware iSCSI initiators, dependent hardware iSCSI initiators and software iSCSI initiators.

- Network File System – NFS is an IP-based file sharing protocol that is used by NAS to allow multiple remote systems to connect to a shared file system. It uses file-level data access, and the target NAS device controls the storage device. NFS is the only NAS protocol that vSphere supports; ESXi uses NFSv3 over TCP/IP and allows multiple hosts to access the same NFS volume simultaneously. You cannot initialize or format a NAS target from a remote server. The NAS server is responsible for the file system where the data is stored.

- Local Storage – Can be internal hard disks located inside your ESXi host, or it can be direct attached storage. Local storage does not require a storage network to communicate with your host. You need a cable connected to the storage unit and, when required, a compatible HBA in your host.

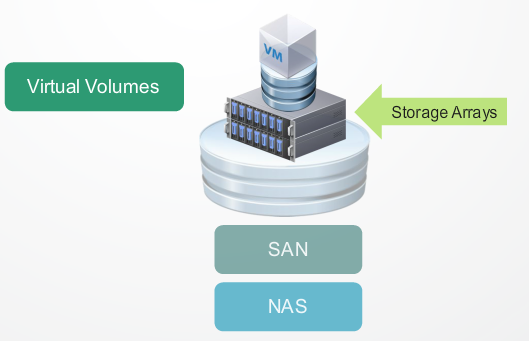

- Virtual Volumes – A new integration and management framework that virtualizes SAN/NAS arrays, enabling a more efficient operational model that is optimized for virtualized environments. Virtual Volumes simplifies storage operations by automating manual tasks. It gives administrators finer control of storage resources and data services at the VM level, simplifying the delivery of storage service levels to applications.

These storage protocols give you the choice and flexibility to adapt to changing IT environments. Each storage option has its own strengths and weaknesses.
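
The differences between the network storage protocols above can be summarised in a small lookup table. The sketch below is only an illustration: the `StorageProtocol` class, the dictionary keys and the `protocols_for` helper are made up for this example, and the `datastore_type` column is a simplification (FCoE is listed under VMFS on the assumption that it carries Fibre Channel block traffic).

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class StorageProtocol:
    name: str
    access: str          # "block" or "file" level access
    transport: str       # what carries the storage traffic
    datastore_type: str  # datastore format typically built on top (simplified)


# Hypothetical summary table built from the descriptions above.
PROTOCOLS = {
    "fc": StorageProtocol("Fibre Channel", "block", "dedicated FC SAN", "VMFS"),
    "fcoe": StorageProtocol("Fibre Channel over Ethernet", "block", "Ethernet", "VMFS"),
    "iscsi": StorageProtocol("iSCSI", "block", "Ethernet", "VMFS"),
    "nfs": StorageProtocol("NFS", "file", "TCP/IP", "NFS"),
}


def protocols_for(datastore_type: str) -> List[str]:
    """List the protocol names that can back the given datastore type."""
    return [p.name for p in PROTOCOLS.values() if p.datastore_type == datastore_type]


print(protocols_for("VMFS"))
print(protocols_for("NFS"))
```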

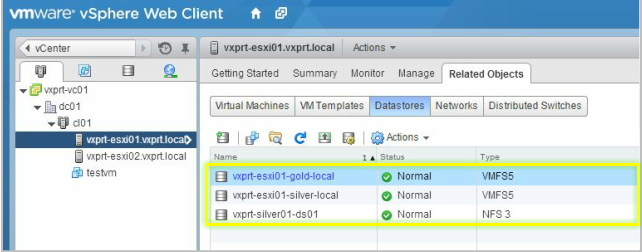

Datastores

In vSphere, VMs are stored in containers called datastores. These are logical volumes that allow you to organize the storage of your ESXi hosts and virtual machines. You can browse the datastores to download or upload files within the vSphere Web Client.

Datastores can also be used to store ISO images, VM templates and floppy images. Depending on the type of storage, datastores can be of the following types:

- VMFS datastores – The vSphere VMFS format is a special high-performance file system format that is optimized for storing virtual machines. You use the VMFS format to deploy datastores on block storage devices. A VMFS datastore can be extended to span several physical storage devices. This feature allows you to pool storage and gives you flexibility in creating the datastore necessary for your virtual machines.

- NFS datastores – You can use NFS volumes to store and boot virtual machines in the same way that you use VMFS datastores. The maximum size of an NFS datastore depends on the support that the NFS server provides. ESXi does not impose any limit on NFS datastore size.

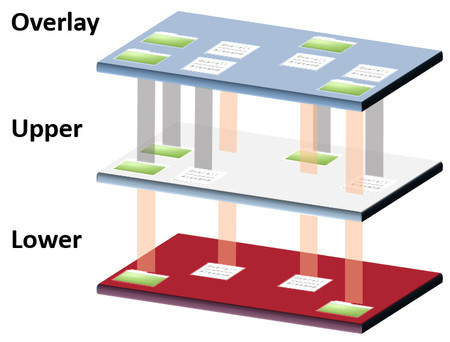

- Virtual Volumes datastores – Virtual Volumes virtualizes SAN and NAS devices by abstracting physical hardware resources into pools of capacity known as virtual datastores. A virtual datastore defines capacity boundaries and access logic, and exposes a set of data services accessible to the virtual machines provisioned in the pool. Virtual datastores are purely logical constructs that can be configured on the fly, when needed, without disruption, and they do not require formatting with a file system.
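
The way a VMFS datastore pools several physical devices can be pictured with a small model. This is a sketch only: the `Extent` and `VmfsDatastore` classes and the device names are hypothetical and do not correspond to real vSphere objects.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Extent:
    device: str       # hypothetical identifier of a physical storage device
    capacity_gb: int


@dataclass
class VmfsDatastore:
    name: str
    extents: List[Extent] = field(default_factory=list)

    def add_extent(self, extent: Extent) -> None:
        """Grow the datastore by adding another physical device to the pool."""
        self.extents.append(extent)

    @property
    def capacity_gb(self) -> int:
        """Total capacity is the sum of all devices backing the datastore."""
        return sum(e.capacity_gb for e in self.extents)


ds = VmfsDatastore("vmfs-ds1", [Extent("lun-0", 500)])
ds.add_extent(Extent("lun-1", 750))  # extend the datastore onto a second device
print(ds.name, ds.capacity_gb, "GB across", len(ds.extents), "devices")
```

From the point of view of a virtual machine, the result is still one datastore; only its capacity has grown.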

Virtual SAN

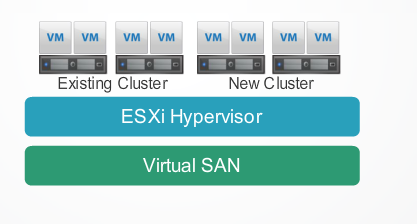

Virtual SAN is a distributed layer of software that runs natively as part of the ESXi hypervisor. You can activate Virtual SAN when you create host clusters. Alternatively, you can enable Virtual SAN on existing clusters.

The hosts in a Virtual SAN cluster need not be identical. Even hosts within the cluster that have no local disks can participate and run their VMs on the Virtual SAN datastore. When enabled, Virtual SAN works with VM storage policies. It monitors and reports on policy compliance during the life cycle of the VM. If a VM becomes non-compliant with its policy because of a host, disk or network failure, or because of workload changes, Virtual SAN takes remedial action. Virtual SAN can be configured as hybrid or all-flash storage.
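
The compliance monitoring and remediation described above can be sketched as follows. This is a deliberately simplified model, not how Virtual SAN is implemented: it assumes a single policy rule, named `failures_to_tolerate` here, under which an object needs that many replicas plus one on healthy hosts. The class, both functions and the host names are hypothetical.

```python
from dataclasses import dataclass
from typing import Set


@dataclass(frozen=True)
class StoragePolicy:
    failures_to_tolerate: int  # assumed rule: object needs this many + 1 healthy replicas


def is_compliant(policy: StoragePolicy, replica_hosts: Set[str],
                 healthy_hosts: Set[str]) -> bool:
    """An object is compliant while enough of its replicas sit on healthy hosts."""
    healthy_replicas = replica_hosts & healthy_hosts
    return len(healthy_replicas) >= policy.failures_to_tolerate + 1


def remediate(policy: StoragePolicy, replica_hosts: Set[str],
              healthy_hosts: Set[str]) -> Set[str]:
    """Return a placement that rebuilds lost replicas on spare healthy hosts."""
    placement = replica_hosts & healthy_hosts
    spare = sorted(healthy_hosts - placement)
    needed = policy.failures_to_tolerate + 1 - len(placement)
    return placement | set(spare[:max(needed, 0)])


policy = StoragePolicy(failures_to_tolerate=1)
replicas = {"esxi-01", "esxi-02"}
healthy = {"esxi-01", "esxi-03", "esxi-04"}  # esxi-02 has failed

print(is_compliant(policy, replicas, healthy))      # compliance after the failure
new_placement = remediate(policy, replicas, healthy)
print(sorted(new_placement))                        # placement after remediation
print(is_compliant(policy, new_placement, healthy))
```

The real placement logic is considerably more involved, but the loop of checking a policy, detecting a violation and restoring compliance follows the same outline.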

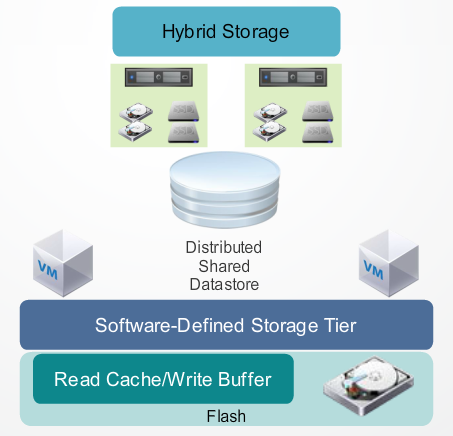

Hybrid Storage – In a hybrid storage architecture, Virtual SAN pools server-attached magnetic disks (HDDs) and flash devices (SSDs) to create a distributed shared datastore that abstracts the storage hardware into a software-defined storage tier for virtual machines. Flash is used as a read cache and write buffer to accelerate performance, and magnetic disks provide data persistence.

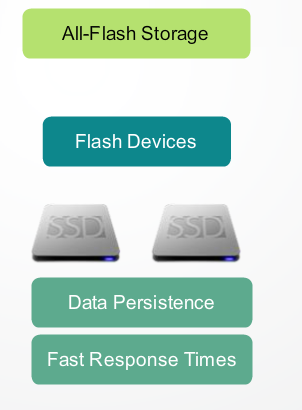

All-Flash Storage – In this architecture, flash devices are intelligently used as a write cache, whilst SSDs provide data persistence and consistently fast response times.

Virtual SAN is a separate paid offering and is not included in vSphere.

Virtual Volumes

Virtual Volumes is a new virtual machine disk management and integration framework that enables array-based operations at the virtual disk level. It makes SAN and NAS storage systems capable of being managed at the virtual machine level. With Virtual Volumes, most data operations are offloaded to the storage arrays.

Virtual Volumes eliminates the need to manage large numbers of LUNs or volumes per host. This reduces operational overhead whilst enabling scalable data services at the per-VM level.

Storage Policy Based Management (SPBM) is a key technology that works in conjunction with Virtual Volumes. This framework provides direct resource allocation and management of storage-related services. Administrators can specify a set of storage requirements and capabilities for any particular virtual machine to match the service levels required by hosted applications.
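
The matching of VM requirements against storage capabilities can be illustrated with a short sketch. The capability names (`replication`, `tier`), the datastore names and both helper functions are invented for this example and are not the actual SPBM API.

```python
from typing import Dict, List

Capabilities = Dict[str, object]


def satisfies(requirements: Capabilities, capabilities: Capabilities) -> bool:
    """True if the datastore advertises every capability the policy asks for."""
    return all(capabilities.get(key) == value for key, value in requirements.items())


def compatible_datastores(requirements: Capabilities,
                          datastores: Dict[str, Capabilities]) -> List[str]:
    """Return the names of datastores that meet the VM's storage policy."""
    return [name for name, caps in datastores.items() if satisfies(requirements, caps)]


# Hypothetical capabilities advertised by two storage containers.
datastores = {
    "vvol-gold": {"replication": True, "tier": "flash"},
    "vvol-silver": {"replication": False, "tier": "hybrid"},
}

# Hypothetical per-VM policy: this application needs replicated storage.
vm_policy = {"replication": True}

print(compatible_datastores(vm_policy, datastores))
```

Expressing the policy per VM rather than per LUN is what lets the same pool of storage serve applications with different service levels.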