I have done my best to break the VMware vSphere 6.0 tutorial into related parts so that the important material is easier to grasp. The articles published so far are:

- An introduction to Data Center Virtualization

- Components of VMware vSphere 6.0 – part 1, which includes an overview of vSphere 6.0, its architecture, topology and configuration maximums.

- Components of VMware vSphere 6.0 – part 2, which includes an introduction to vCenter Server and its features.

- Components of VMware vSphere 6.0 – part 3, which covers Shared Storage, Storage Protocols, Datastores, Virtual SAN and Virtual Volumes.

- Components of VMware vSphere 6.0 – part 4, which covers networking features in vSphere, virtual networking, virtual switches, virtual switch types and an introduction to NSX.

In this article I will continue with the following topics:

- vSphere Resource Management Features

- vMotion

- Distributed Resource Scheduler

- Distributed Power Management

- Storage vMotion

- Storage DRS

- Storage I/O control

- Network I/O control

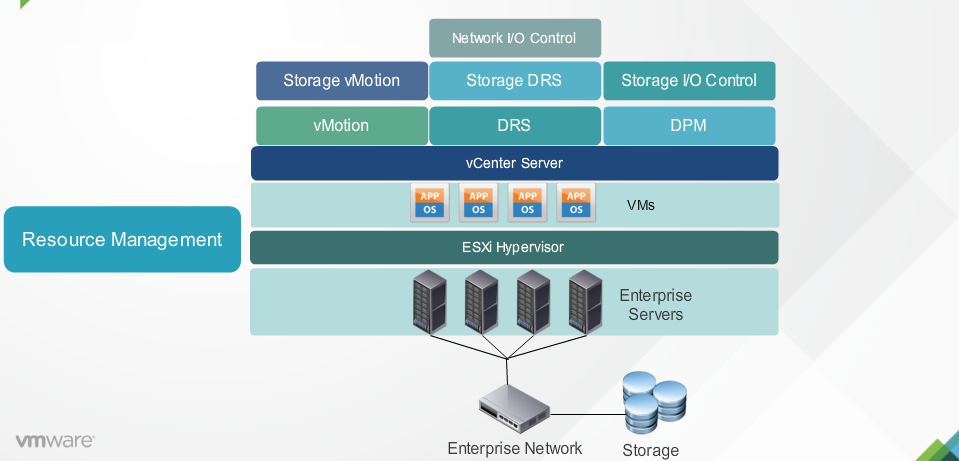

vSphere Resource Management Features

Resource management is the allocation of resources from resource providers to resource consumers. It allows you to dynamically reallocate resources so that you can use the available capacity more efficiently. The features that let you perform resource management include vMotion, DRS, DPM, Storage vMotion, Storage DRS, Storage I/O control and Network I/O control.


vMotion

vMotion allows you to migrate a running virtual machine from one ESXi host to another without any downtime. You can also change both the host and the datastore of the virtual machine during a vMotion. When you migrate a virtual machine with vMotion and change only the host, the active memory and precise execution state of the virtual machine are rapidly transferred to the new host. vMotion suspends the virtual machine on the source host, copies the memory bitmap to the new ESXi host and resumes the virtual machine on its new host. The associated virtual disk remains at the same location on storage that is shared between the two hosts; only the RAM and the system state are copied to the new host. When you change both the host and the datastore, the active memory and VM execution state are copied to the new host and the virtual disk is moved to another datastore.
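The description above condenses vMotion's memory transfer into one step. Live migration of this kind is usually explained as iterative pre-copy: memory is copied while the VM keeps running, pages the VM dirties in the meantime are tracked (the role of the bitmap) and re-sent, and only a small final set is transferred while the VM is briefly suspended. The Python sketch below models that loop under simplifying assumptions (constant link speed and a constant page-dirtying rate). `simulate_precopy` and its 64 MB `switchover_mb` cutoff are hypothetical illustrations, not vMotion's actual internals.

```python
from dataclasses import dataclass


@dataclass
class PreCopyResult:
    rounds: int            # pre-copy passes made while the VM kept running
    copied_mb: float       # total memory sent, including pages re-sent after being dirtied
    final_dirty_mb: float  # memory sent during the brief suspend-and-switch-over


def simulate_precopy(memory_mb: float, link_mb_per_s: float, dirty_mb_per_s: float,
                     switchover_mb: float = 64.0, max_rounds: int = 30) -> PreCopyResult:
    """Hypothetical model of iterative pre-copy; all rates are assumed constant."""
    if dirty_mb_per_s >= link_mb_per_s:
        # the VM dirties memory faster than the link can send it, so passes never shrink
        raise ValueError("pre-copy cannot converge at this dirty rate")
    to_send = memory_mb   # the first pass copies all of the VM's memory
    copied = 0.0
    rounds = 0
    while to_send > switchover_mb and rounds < max_rounds:
        seconds = to_send / link_mb_per_s    # how long this pass takes
        copied += to_send
        to_send = dirty_mb_per_s * seconds   # pages dirtied (and marked in the bitmap) meanwhile
        rounds += 1
    # the remaining dirty set is small: suspend the VM, send it, resume on the destination host
    copied += to_send
    return PreCopyResult(rounds, copied, to_send)


result = simulate_precopy(memory_mb=4096, link_mb_per_s=1000, dirty_mb_per_s=100)
print(result.rounds, round(result.final_dirty_mb, 2))  # prints: 2 40.96
```

With 4 GB of memory, a 1000 MB/s link and 100 MB/s of dirtied memory, two passes shrink the outstanding set to about 41 MB before the switch-over. The guard at the top shows why, in this model, a very write-heavy VM is hard to migrate: if memory is dirtied faster than it can be sent, the passes never converge.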

vMotion migration to another host is possible in vSphere environments without shared storage. The different types of vMotion include:

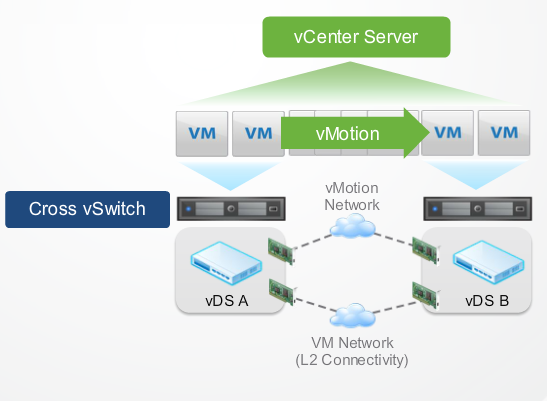

Cross vSwitch vMotion

This allows you to seamlessly migrate a VM across different virtual switches while performing a vMotion.


Cross vCenter vMotion

This allows you to migrate VMs from a host managed by one vCenter Server to a host managed by another vCenter Server.


Long Distance vMotion

This allows you to migrate a VM across a WAN to another site. It supports latencies of up to 150 ms RTT (round-trip time) between sites.

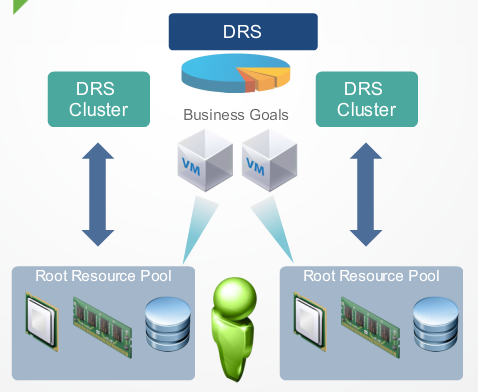

DRS - Distributed Resource Scheduler

DRS aggregates computing capacity across a collection of servers into logical resource pools. It intelligently allocates available resources among the virtual machines based on predefined rules that reflect business needs and changing priorities. Each DRS cluster has a root resource pool that groups the resources of that cluster, and resource pools determine how VMs share CPU and memory resources. A resource pool allows you as an administrator to divide and allocate resources to virtual machines and to other resource pools, and to control the aggregate CPU and memory resources of the DRS cluster. Resource pools also let you prioritize resources for the highest-value applications, so that resource allocation aligns with business goals automatically.
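To make the shares, reservation and limit constructs concrete, here is a simplified Python model of how a parent pool's capacity might be divided among its children under contention: each child receives capacity in proportion to its shares, but never less than its reservation and never more than its limit or its demand. This is an illustrative approximation, not DRS's actual entitlement algorithm (which also handles expandable reservations, nested pools and VM overheads); `Child`, `divide` and the example numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Child:
    name: str
    shares: int          # relative priority when the parent pool is contended (must be > 0)
    reservation: float   # guaranteed minimum, e.g. in MHz
    limit: float         # hard maximum; use float("inf") for "unlimited"
    demand: float        # what the child would consume if unconstrained

    def cap(self) -> float:
        # never more than demand or limit, but always at least the reservation
        return max(self.reservation, min(self.limit, self.demand))


def divide(capacity: float, children: list[Child]) -> dict[str, float]:
    """Hypothetical model: split capacity by shares, clamped to [reservation, cap]."""
    if sum(c.reservation for c in children) > capacity:
        raise ValueError("reservations exceed the parent pool's capacity")
    if sum(c.cap() for c in children) <= capacity:
        return {c.name: c.cap() for c in children}   # no contention: everyone gets what it wants

    def alloc_at(level: float) -> dict[str, float]:
        # allocation if every child received `level` units per share, then clamped
        return {c.name: min(max(level * c.shares, c.reservation), c.cap()) for c in children}

    # bisect on the per-share level until the clamped allocations fill the capacity
    lo, hi = 0.0, max(c.cap() / c.shares for c in children)
    for _ in range(200):
        mid = (lo + hi) / 2
        if sum(alloc_at(mid).values()) < capacity:
            lo = mid
        else:
            hi = mid
    return alloc_at(hi)


pools = [
    Child("prod", shares=4000, reservation=2000, limit=float("inf"), demand=10000),
    Child("test", shares=2000, reservation=0, limit=3000, demand=8000),
    Child("dev", shares=1000, reservation=0, limit=float("inf"), demand=1000),
]
print({name: round(mhz) for name, mhz in divide(12000, pools).items()})
# {'prod': 8000, 'test': 3000, 'dev': 1000}
```

Bisection works because the total allocation never decreases as the per-share level rises, so the search converges on the level at which the clamped allocations fill the parent pool. Here `test` is held at its limit and `dev` at its demand, so the capacity they cannot use flows to `prod`.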


When a virtual machine experiences increased load, or when resources on its current host become scarce, DRS may redistribute virtual machines across the hosts in the cluster to satisfy the resource needs of the consuming virtual machine. DRS offers several benefits:

- It improves data center efficiency by automatically optimizing hardware utilization and responding to changing conditions.

- It allows you to dedicate resources to business units while still benefiting from the higher hardware utilization that resource pooling provides.

- With DRS, you can perform zero-downtime server maintenance and lower power consumption costs by up to 20%.
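One simple way to model the redistribution step is to measure cluster imbalance as the standard deviation of each host's load divided by its capacity, then greedily pick the single migration that reduces it most, repeating until the cluster is balanced enough. The Python sketch below does exactly that. It is an illustrative approximation, not DRS's actual algorithm, which also weighs migration cost, affinity rules and the configured migration threshold; the function names, the 0.05 target and the MHz figures are hypothetical.

```python
from statistics import pstdev


def imbalance(placement: dict[str, str], demand: dict[str, float],
              capacity: dict[str, float]) -> float:
    """Standard deviation of per-host load, each load normalized by host capacity."""
    load = {host: 0.0 for host in capacity}
    for vm, host in placement.items():
        load[host] += demand[vm]
    return pstdev([load[h] / capacity[h] for h in capacity])


def recommend_migrations(placement: dict[str, str], demand: dict[str, float],
                         capacity: dict[str, float], target: float = 0.05,
                         max_moves: int = 10):
    """Hypothetical greedy balancer: returns (moves, resulting placement)."""
    placement = dict(placement)   # work on a copy; the caller's placement is untouched
    moves = []
    for _ in range(max_moves):
        current = imbalance(placement, demand, capacity)
        if current <= target:
            break                 # cluster is balanced enough
        best = None               # (resulting imbalance, vm, source host, destination host)
        for vm, src in list(placement.items()):
            for dst in capacity:
                if dst == src:
                    continue
                placement[vm] = dst                          # try the move...
                score = imbalance(placement, demand, capacity)
                placement[vm] = src                          # ...and undo it
                if best is None or score < best[0]:
                    best = (score, vm, src, dst)
        if best is None or best[0] >= current:
            break                 # no single move improves balance
        _, vm, src, dst = best
        placement[vm] = dst
        moves.append((vm, src, dst))
    return moves, placement


moves, _ = recommend_migrations(
    placement={"vm1": "esx1", "vm2": "esx1", "vm3": "esx1", "vm4": "esx2"},
    demand={"vm1": 4000, "vm2": 3000, "vm3": 2000, "vm4": 1000},   # MHz
    capacity={"esx1": 10000, "esx2": 10000},
)
print(moves)  # [('vm1', 'esx1', 'esx2')]
```

Moving `vm1` leaves both hosts at 50% load, so a single recommendation is enough. The sketch does not check whether the destination host has room, which a real scheduler must.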

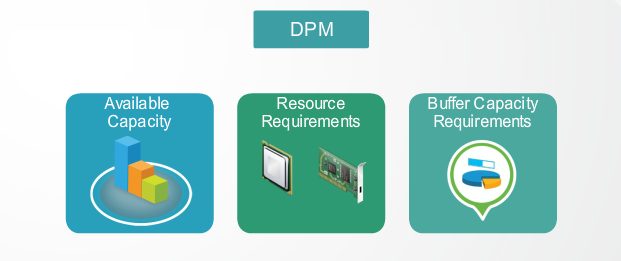

DPM - Distributed Power Management

DPM is a feature of VMware DRS that continuously monitors resource requirements in a DRS cluster. When the resource requirements of the cluster decrease during periods of low usage, VMware DPM consolidates workloads to reduce the power consumed by the cluster. When the resource requirements of workloads increase during periods of high usage, VMware DPM brings powered-down hosts back online to ensure that service levels are met. DPM compares the available capacity in a DRS cluster against the resource requirements of the virtual machines plus an administrator-defined buffer capacity.


If DPM detects that too many hosts are powered on, it consolidates virtual machines onto fewer hosts and powers off the remaining hosts. VMware DPM brings powered-off hosts back online to meet virtual machine requirements, either at a predefined time or when it senses an increase in virtual machine demand.
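The capacity comparison described above can be sketched as a small Python function that works out how many identical hosts are needed to cover VM demand plus the administrator's buffer, and reports how many hosts to power on or off. VMware DPM itself evaluates host utilization against a target range and weighs the cost of powering hosts on and off, so treat this as an illustration only. The function name, the 20% default buffer, identical host sizes and the `min_hosts_on` floor are all assumptions.

```python
import math


def dpm_recommendation(hosts_total: int, hosts_on: int, host_capacity: float,
                       vm_demand: float, buffer_fraction: float = 0.2,
                       min_hosts_on: int = 1) -> int:
    """Hypothetical: positive = hosts to bring online, negative = hosts to power off."""
    required = vm_demand * (1 + buffer_fraction)          # VM demand plus admin-defined headroom
    needed = math.ceil(required / host_capacity)          # identical hosts assumed
    needed = max(min_hosts_on, min(needed, hosts_total))  # keep a floor, never exceed the cluster
    return needed - hosts_on


# night time: 45 GHz of demand on eight 20 GHz hosts
print(dpm_recommendation(hosts_total=8, hosts_on=8, host_capacity=20000, vm_demand=45000))  # -5
# morning peak: demand rises to 90 GHz while only three hosts are on
print(dpm_recommendation(hosts_total=8, hosts_on=3, host_capacity=20000, vm_demand=90000))  # 3
```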

Storage vMotion

Storage vMotion enables you to migrate a virtual machine and its disk files from one datastore to another while the virtual machine is running. It performs the migration with zero downtime, continuous service availability and complete transaction integrity. With Storage vMotion, you can choose to place the virtual machine and all of its disks in a single location, or select separate locations for the virtual machine's configuration file and each virtual disk. Storage vMotion enables you to perform proactive storage migrations, simplify array migrations and improve virtual machine storage performance. It is fully integrated with VMware vCenter Server for easy migration and monitoring.

Storage DRS

Storage DRS provides smart virtual machine placement and load balancing based on I/O and space capacity. It helps decrease the operational effort associated with provisioning virtual machines and monitoring the storage environment. Storage DRS monitors the storage usage of virtual machines and keeps storage devices more evenly loaded. It is a load balancer like DRS, but it focuses on storage devices: it monitors disk I/O latency on shared storage devices to determine whether a particular device is overburdened, and it distributes the load across the datastores in a datastore cluster to ensure smooth performance. It can be set up to work in either manual or fully automated mode.

- Manual mode - Storage DRS makes balancing recommendations that an administrator may approve.

- Automatic mode - Storage DRS makes Storage vMotion decisions on its own to lower I/O latency and keep all virtual machines performing optimally.
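The two modes can be illustrated with a small Python sketch: a datastore counts as overloaded when its observed latency or its space utilization crosses a threshold, and the largest VM on it is proposed for a move to the fastest datastore that has room. In manual mode the function only returns recommendations; in automatic mode it also applies them. The 15 ms latency and 80% space thresholds are assumed defaults, and `Datastore` and `balance` are hypothetical names. Real Storage DRS also models how a move will change latency, which this sketch does not attempt.

```python
from dataclasses import dataclass, field


@dataclass
class Datastore:
    name: str
    capacity_gb: float
    latency_ms: float                                      # observed I/O latency
    vms: dict[str, float] = field(default_factory=dict)    # VM name -> disk size in GB


def balance(datastores: list[Datastore], mode: str = "manual",
            latency_threshold_ms: float = 15.0, space_threshold: float = 0.8):
    """Hypothetical: return (vm, source, destination) moves; apply them in automatic mode."""
    used = {d.name: sum(d.vms.values()) for d in datastores}   # includes moves planned so far
    recs = []
    for ds in datastores:
        overloaded = (ds.latency_ms > latency_threshold_ms
                      or used[ds.name] / ds.capacity_gb > space_threshold)
        if not overloaded or not ds.vms:
            continue
        vm, size = max(ds.vms.items(), key=lambda item: item[1])   # largest disk frees the most space
        targets = [t for t in datastores
                   if t is not ds and t.latency_ms <= latency_threshold_ms
                   and (used[t.name] + size) / t.capacity_gb <= space_threshold]
        if not targets:
            continue
        dst = min(targets, key=lambda t: t.latency_ms)             # prefer the fastest datastore
        used[ds.name] -= size
        used[dst.name] += size
        recs.append((vm, ds.name, dst.name))
    if mode == "automatic":
        by_name = {d.name: d for d in datastores}
        for vm, src, dst in recs:
            by_name[dst].vms[vm] = by_name[src].vms.pop(vm)        # stands in for a Storage vMotion
    return recs


stores = [
    Datastore("ds1", 1000, latency_ms=25, vms={"web": 300, "db": 400}),
    Datastore("ds2", 1000, latency_ms=5, vms={"app": 200}),
    Datastore("ds3", 1000, latency_ms=8, vms={"log": 100}),
]
print(balance(stores))                     # manual: [('db', 'ds1', 'ds2')], nothing moves
print(balance(stores, mode="automatic"))   # same move, now applied
print(sorted(stores[1].vms))               # ['app', 'db']
```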

Storage I/O control (SIOC)

SIOC provides storage I/O performance isolation for virtual machines and enables administrators to comfortably run important workloads in a highly consolidated virtualized storage environment. It protects all virtual machines from undue negative performance impact caused by misbehaving, I/O-heavy virtual machines. Note that SIOC can itself hurt performance if it is not configured properly. SIOC protects the service level of critical virtual machines by giving them preferential I/O resource allocation during periods of congestion. It achieves this by extending the shares and limits constructs, used extensively for CPU and memory, to manage the allocation of storage I/O resources. SIOC monitors the device latency that hosts observe when communicating with a datastore. When latency exceeds a set threshold, the feature engages to relieve congestion, and each virtual machine that accesses that datastore is allocated I/O resources in proportion to its shares. SIOC thus lets administrators mitigate the performance loss of critical workloads caused by high congestion and storage latency during peak load periods, producing better and more predictable performance for workloads during periods of congestion.
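The proportional-share behavior described above can be sketched in Python: below the latency threshold nothing is throttled, and above it the datastore's capacity is split by shares, with any capacity a VM cannot use (because of its limit or its demand) redistributed to the others. SIOC actually enforces this by adjusting per-host device queue depths, so modeling the datastore as a fixed IOPS budget is a simplification; the 30 ms threshold, the 3000 IOPS capacity and `sioc_allocate` are assumed values and names for illustration.

```python
def sioc_allocate(latency_ms: float, threshold_ms: float, capacity_iops: float,
                  vms: dict[str, tuple[int, float, float]]) -> dict[str, float]:
    """Hypothetical model. vms maps a VM name to (shares, limit_iops, demand_iops)."""
    wants = {name: min(limit, demand) for name, (_, limit, demand) in vms.items()}
    if latency_ms <= threshold_ms:
        return wants      # no congestion: this model does not throttle beyond each VM's limit
    alloc = {name: 0.0 for name in vms}
    active = list(vms)    # VMs that still want more I/O
    remaining = capacity_iops
    while active and remaining > 1e-9:
        total_shares = sum(vms[name][0] for name in active)
        spill = 0.0       # capacity offered to VMs that did not need all of it
        still_active = []
        for name in active:
            offer = remaining * vms[name][0] / total_shares   # proportional to shares
            gap = wants[name] - alloc[name]
            if offer >= gap:
                alloc[name] += gap        # satisfied: return the surplus to the pool
                spill += offer - gap
            else:
                alloc[name] += offer
                still_active.append(name)
        active, remaining = still_active, spill
    return alloc


vms = {
    "sql": (2000, float("inf"), 5000),    # critical VM with double shares
    "web": (1000, float("inf"), 5000),
    "batch": (1000, 300, 5000),           # capped by a 300 IOPS limit
}
print(sioc_allocate(latency_ms=40, threshold_ms=30, capacity_iops=3000, vms=vms))
# {'sql': 1800.0, 'web': 900.0, 'batch': 300.0}
```

Because `batch` is capped at 300 IOPS, the 450 IOPS it would otherwise have received are re-split 2:1 between `sql` and `web`.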

Network I/O control (NIOC)

Administrators and architects need a simple and reliable way to prioritize critical traffic over the physical network if and when contention for those physical resources occurs. NIOC addresses this challenge with a software approach to partitioning physical network bandwidth among different types of network traffic flows. It does so by providing QoS policies that enforce traffic isolation, predictability and prioritization, helping IT organizations overcome the contention that results from consolidation. NIOC ensures that no single flow is allowed to dominate the others, which prevents drops and undesired jitter. It also provides flexible network partitioning to help users deal with overcommitment when flows compete aggressively for the same resources. NIOC enforces traffic bandwidth limits across the overall set of dvUplinks of a vSphere Distributed Switch (VDS) and uses network capacity efficiently through load-based teaming.
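Load-based teaming can be illustrated with a short Python sketch: when an uplink's averaged utilization exceeds a threshold, traffic from one of its virtual ports is moved to the least-utilized uplink, provided the move does not push that uplink over the threshold too. The 75% threshold is an assumed value, `lbt_rebalance` is a hypothetical name, and moving the busiest port that fits is a design choice of this sketch; NIOC's own share and limit scheduling is not modeled here.

```python
def lbt_rebalance(uplink_gbps: dict[str, float],
                  ports: dict[str, tuple[str, float]],
                  threshold: float = 0.75):
    """Hypothetical. ports maps a virtual port to (current uplink, average Gbps)."""
    ports = dict(ports)    # work on a copy
    moves = []

    def used(uplink: str) -> float:
        return sum(gbps for up, gbps in ports.values() if up == uplink)

    for src in uplink_gbps:
        others = [u for u in uplink_gbps if u != src]
        while others and used(src) > threshold * uplink_gbps[src]:
            dst = min(others, key=lambda u: used(u) / uplink_gbps[u])   # least-utilized uplink
            # ports on src whose traffic would not push dst over the threshold
            fits = [(port, gbps) for port, (up, gbps) in ports.items()
                    if up == src and used(dst) + gbps <= threshold * uplink_gbps[dst]]
            if not fits:
                break                                        # nowhere to put the traffic
            port, gbps = max(fits, key=lambda item: item[1])  # move the busiest port that fits
            ports[port] = (dst, gbps)
            moves.append((port, src, dst))
    return moves, ports


moves, _ = lbt_rebalance(
    uplink_gbps={"vmnic0": 10, "vmnic1": 10},
    ports={"vm-a": ("vmnic0", 5), "vm-b": ("vmnic0", 3),
           "vm-c": ("vmnic0", 1), "vm-d": ("vmnic1", 1)},
)
print(moves)  # [('vm-a', 'vmnic0', 'vmnic1')]
```

After the single move, `vmnic0` carries 4 Gbps and `vmnic1` 6 Gbps, both below the 7.5 Gbps threshold.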